The Compounding Effect of Boring AI

A practical operating playbook for turning small automations into large productivity gains

Introduction

Within a year, one high school English teacher predicts, educators won't be able to distinguish student writing from AI-generated text. While academia grapples with that existential challenge, operators should be quietly using the same technology to unlock 40-50% productivity gains by focusing on the boring stuff.

A New York Times survey of 21 professionals using AI at work reveals a noteworthy pattern: the highest-impact applications aren't flashy. They're mundane. A restaurant owner selecting wines. A therapist formatting notes. A project coordinator proofreading emails. But when these individual productivity improvements scale across organizational hierarchies, something important happens.

We can apply some math to these scenarios to tell a story every operator needs to understand. Everyone talks about frontier models. Operators should be obsessing over the mundane deployments: calendar triage, intake forms that label themselves, version‑safe templates, and quiet tools that reclaim minutes at the task level, compound into hours at the team level, and then into quarters at the company level.

Plenty of “AI transformations” fail to scale because they chase novelty, not throughput. The firms that are winning start with the dull jobs that block flow, measure time, and then let compounded productivity and learning work their magic.

Seen together, these small tasks share five patterns that compound across teams.

21 Everyday Ways Workers Use AI

(as cataloged by The New York Times, Aug. 11, 2025)

To ground the discussion, here are the specific use cases from the Times piece—organized by me into five practical groupings. The bullets below restate the Times’ examples plainly and non‑numerically for clarity.

1) Documentation & Admin (turn messy inputs into structured outputs)

Type up medical notes — Abridge drafts clinician–patient visit notes inside the EHR.

Write up therapy plans — Free‑text session notes converted to structured SOAP documentation.

Make a bibliography — Generates citations and formats “works cited” to spec.

Handle everyday busywork — Summarizes threads, proofs emails, builds templates, compares long docs.

Create standards‑aligned lesson plans — Drafts multi‑day plans that match stated curricula.

Assist call‑center agents — Real‑time suggestions with links to the correct policy page.

2) Decision Support & Knowledge Retrieval (faster, clearer reasoning)

Review medical literature — Surfaces likely‑relevant papers to read in full.

Explain ‘legalese’ in plain English — Tests clarity and anticipates arguments.

Select wines for a restaurant menu — Shortlists to price and region constraints.

Write code to spec — Code generation that tackles scoped engineering tasks.

3) Quality Control & Compliance (reduce errors before they ship)

Check legal documents in a D.A.’s office — Flags typos, missing fields, mischarges, and improper redactions.

Detect if students are using A.I. — Uses detectors plus judgment to evaluate suspected submissions.

4) Pattern Recognition & Classification (find the signal in noise)

Detect leaks in municipal water systems — Sensors plus models spot anomalous flow/noise.

Digitize and classify a herbarium — Spectral scans route likely IDs and escalate uncertain cases to experts.

Probe how brains encode language — Uses LLM internals to test hypotheses about neural representations.

5) Creative Augmentation & Language/Visual Production (more ideas, better polish)

Use A.I. as a creative muse — Style‑conditioned image grids to spark new directions and critique drafts.

Make product photos look better — Generative fill fixes glare, extends backgrounds, and composes shots.

Help get pets adopted — Generates and iterates campaign concepts; teams adapt the best.

Pick needle, thread, and materials — Produces stitch/pattern suggestions and organized how‑tos.

Rewrite messages more politely — Firm, concise notes that soften tone without losing clarity.

Translate 17th–18th‑century lyrics — Acts as a skeptical tutor on archaic language.

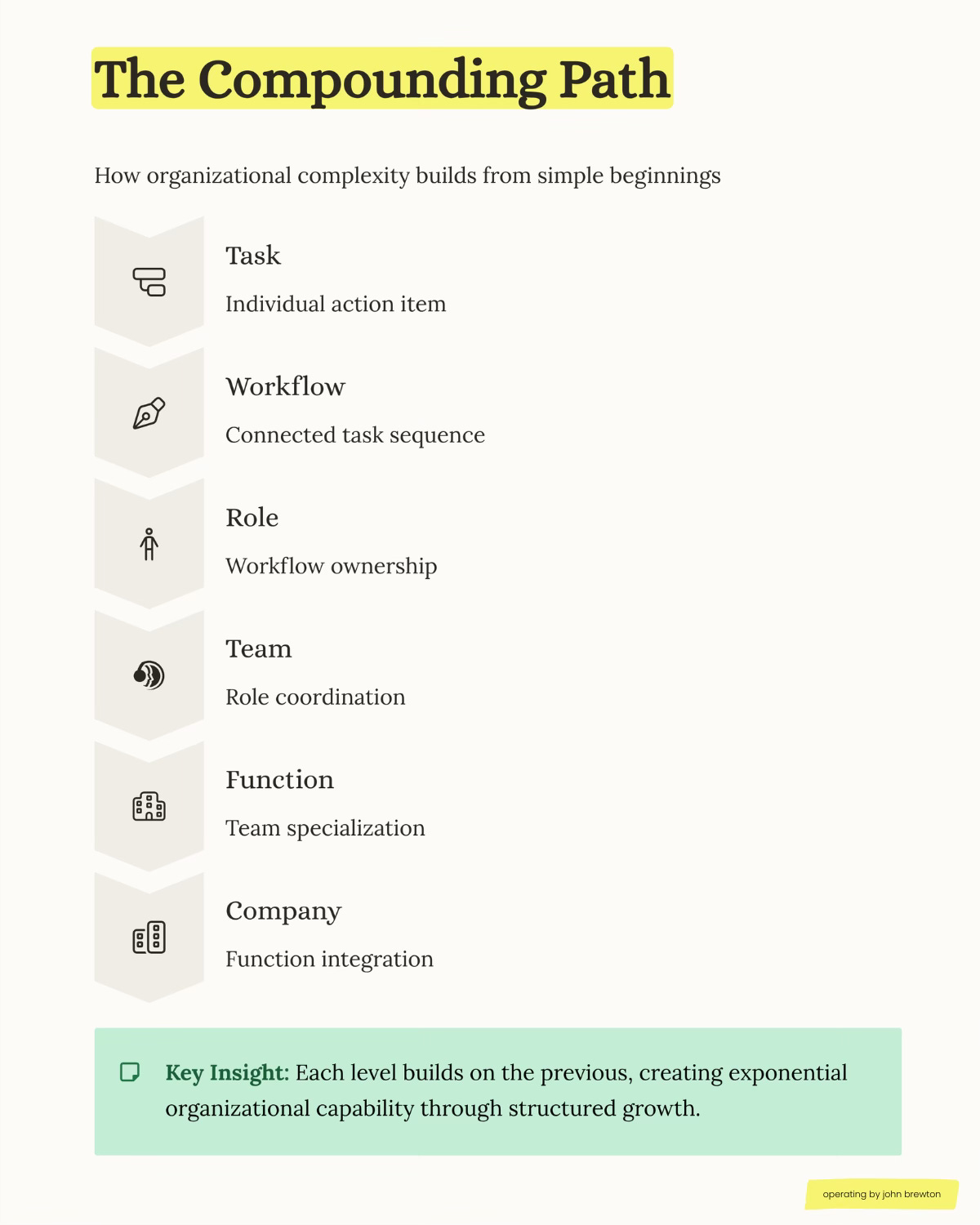

How Simple Tasks Compound

The cases above are local fixes, yet their effects are systemic. Small cuts in cycle time and variance at the task level shorten queues in the workflow, which reduces rework, which frees manager attention, which improves prioritization. Better inputs and faster feedback raise first‑time‑right rates, so less work boomerangs. Information becomes available when needed, so decisions land earlier and with fewer meetings. The result is more output per unit of time without adding headcount.

What changes along the way

Variance falls at the source, so downstream steps stabilize.

Queues shrink, so handoffs and wait time drop.

Defects decline, so managers spend less time chasing errors and more time coaching and improving systems.

Signals clarify, so executives set clearer priorities and sequence work better.

Cash moves faster as cycle times compress and error rates fall.

This is why “Boring AI” matters. The value does not come from novelty; it comes from disciplined reductions in time, variance, and defects that multiply across the org chart. Measure hours returned and escaped defects at the edges so that throughput and working capital can improve at the core.

Operating Model and Approach

We use four tools: a five‑pattern taxonomy to classify work; an AI leverage pyramid; a scoreboard that tracks hours returned, cycle time, first‑time‑right, and escaped defects; and a phased roadmap that moves from documentation to micro‑automation to decision support to role redesign.

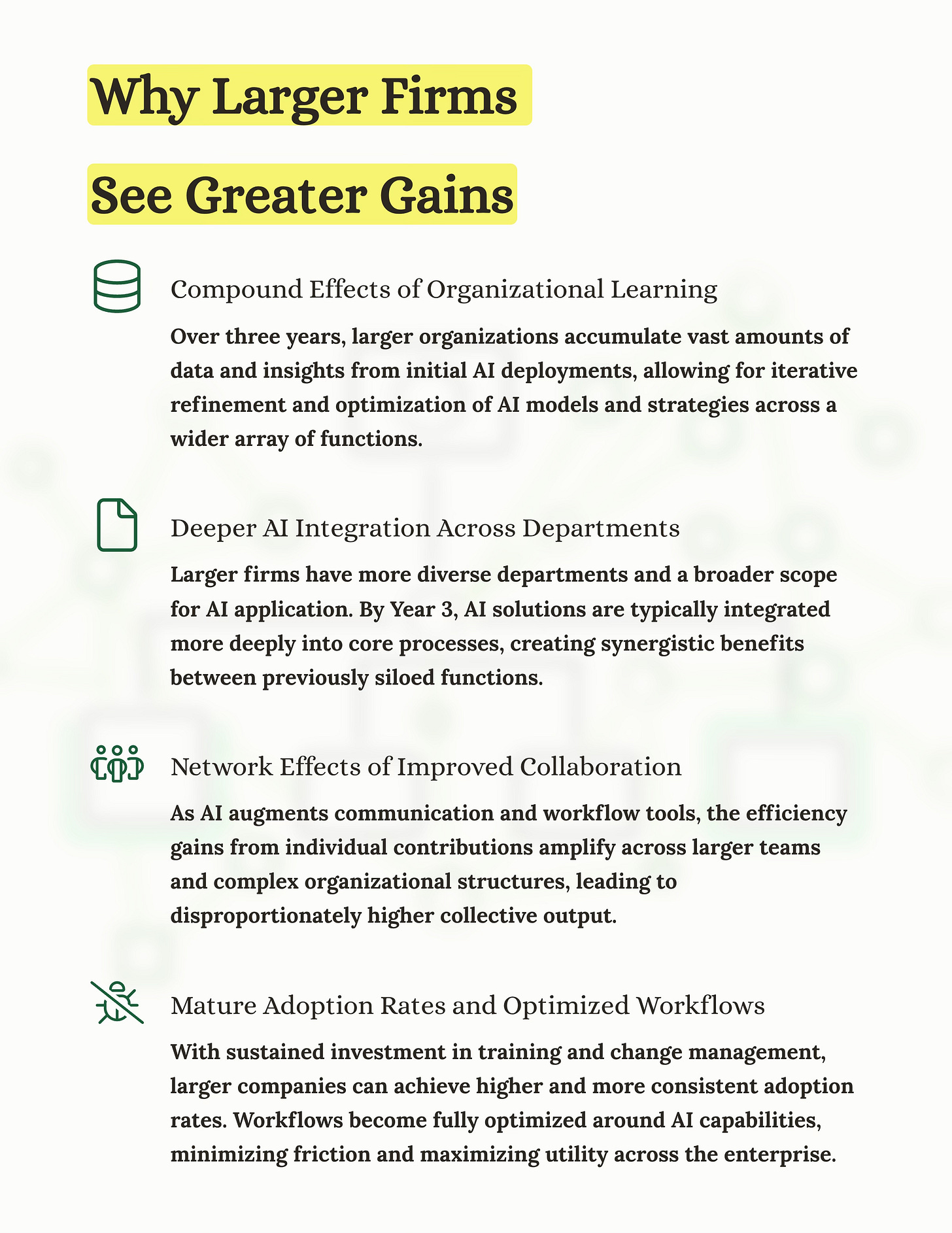

The Quantitative Reality: how 4% becomes 40%

Start simple. Assume each knowledge worker saves 4 percent of their week through small automations: intake classification, formatting, light drafting, file naming, and task routing. Alone, it looks trivial. In practice, it unlocks second‑order effects.

Queue compression: fewer handoffs, shorter waits.

Error prevention: standardized inputs reduce rework.

Context availability: decisions made with the right data, on time.

Over 6 to 12 months, those effects compound across roles:

Executive work: 5% time to strategy, clearer priorities

Middle managers: 15–20% time to coaching and review

ICs: 25–35% time to higher‑value work

When individual contributors move faster with fewer defects, managers spend less time chasing status and more time improving systems. Executives then have cleaner signals, which reduces thrash. The company does not only “get 4 percent.” The entire operating curve shifts.
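One simple way to read "compounding" here is as a small gain that repeats and builds on itself over time. The sketch below is illustrative arithmetic only: it assumes a constant rate of improvement per period, which is a simplification introduced for this example and not the model behind the figures above. The function names are hypothetical.

```python
def compounded_gain(rate_per_period: float, periods: int) -> float:
    """Total fractional gain after a constant per-period rate compounds."""
    return (1 + rate_per_period) ** periods - 1


def required_rate(target_gain: float, periods: int) -> float:
    """Constant per-period rate needed to reach target_gain after `periods`."""
    return (1 + target_gain) ** (1 / periods) - 1


if __name__ == "__main__":
    # Assumption for illustration: a 40% total gain reached over 12 months.
    monthly = required_rate(0.40, 12)
    print(f"Monthly rate needed for a 40% gain in 12 months: {monthly:.2%}")

    # Assumption for illustration: a 4% gain that repeats each quarter for a year.
    print(f"4% compounding over 4 quarters: {compounded_gain(0.04, 4):.2%}")
```

The point of the exercise is that the per-period rate needed to reach a large cumulative gain is much smaller than the headline number, provided the gains actually build on each other rather than being consumed by rework.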

Order‑of‑magnitude guide, not a promise

• $10M firm: 10–15% productivity lift in year three

• $100M firm: 18–22%

• $500M firm: 25–30%

Outcomes vary by baseline discipline. Poor data integrity, as always seems to be the case, can stall the flywheel, which is why documentation and standard work sit at the bottom of any durable stack.

The AI Leverage Pyramid

Think of leverage as a stack, bottom to top:

1) Documentation and templates: reduce variance at the source.

2) Automation of micro‑tasks: classification, extraction, formatting.

3) Decision support: draft, summarize, compare, suggest.

4) Guardrails: quality checks and exception routing.

5) Learning loop: use outcomes to improve steps 1–4.

Skip the base and the top wobbles. Build the base and the rest compounds.

Five categories where “boring AI” compounds fast

1) Documentation burden elimination

Auto‑summaries of meetings, version‑safe SOPs, consistent naming, and metadata on every file. This preserves organizational memory and shortens onboarding.

2) Decision support acceleration

Drafts for emails, briefs, RFP responses, and first‑pass analyses that are 70–80 percent complete, then human‑finished. Managers review decisions with the relevant context pre‑attached.

3) Quality control automation

Checklists that never forget. Style, compliance, and policy checks before work moves. Exceptions route to a human with the right artifact attached.

4) Pattern recognition at scale

Recurring issues surface from tickets, notes, and chats. The same engine proposes merges, dedupes, and next best actions.

5) Creative augmentation

On‑brand first passes for sales decks, job posts, and support macros. Humans tune tone, facts, and edge cases.
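The quality control pattern in category 3 (checks that run before work moves, with exceptions routed to a human and the artifact attached) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: the `WorkItem` shape, the required field names, the 280-character limit, and the route labels are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical required fields for an intake document (assumption for illustration).
REQUIRED_FIELDS = ("owner", "due_date", "summary")


@dataclass
class WorkItem:
    item_id: str
    fields: dict
    issues: list = field(default_factory=list)  # filled in by route()


def check_required_fields(item: WorkItem) -> Optional[str]:
    """Return a message listing empty or absent required fields, else None."""
    missing = [name for name in REQUIRED_FIELDS if not item.fields.get(name)]
    return f"missing fields: {', '.join(missing)}" if missing else None


def check_summary_length(item: WorkItem) -> Optional[str]:
    """Return a message if the summary exceeds the (assumed) length limit."""
    summary = item.fields.get("summary") or ""
    return "summary longer than 280 characters" if len(summary) > 280 else None


CHECKS = [check_required_fields, check_summary_length]


def route(item: WorkItem) -> str:
    """Run every check; clean items move on, the rest go to a human reviewer."""
    item.issues = []
    for check in CHECKS:
        message = check(item)
        if message is not None:
            item.issues.append(message)
    return "human_review" if item.issues else "next_step"


if __name__ == "__main__":
    clean = WorkItem("A-1", {"owner": "ops", "due_date": "2025-09-01",
                             "summary": "Renew vendor contract"})
    flawed = WorkItem("A-2", {"owner": "", "summary": "x" * 300})
    print(clean.item_id, route(clean))
    print(flawed.item_id, route(flawed), flawed.issues)
```

Keeping the issue list on the item itself is one way to make sure the reviewer receives the exception together with the reasons it was flagged.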

Mundane AI in the wild

As outlined above, the twenty‑one everyday uses from the August 2025 New York Times report map cleanly to the five patterns, and the examples validate each bucket: the benefits arrive as cleaner inputs and shorter queues, not flashy replacement. Representative cases:

• Lesson plans that meet state standards [Documentation].

• Clinical notes drafted from visits [Documentation].

• Paperwork checks before arraignment [Quality control].

• Agent assist surfacing tax pages live [Documentation].

• Leak signals from hydrant sensors [Pattern recognition].

• Herbarium IDs from spectral scans [Pattern recognition].

• Bibliographies and citations on demand [Documentation].

• Generative fill for product photos [Creative augmentation].

Across roles, workers report less busywork and fewer defects, with the gains showing up first as reduced cycle time and rework. Adoption is already broad, yet mostly quiet. The internal story at the firm is not one about AI, but one about time saved and made available for more important work.

Where This Works Best:

The $100–$500 Million “sweet spot”

Orgs of this size are complex enough to have handoffs and waste, yet small enough to change without political gridlock. Resist theatrics. Stack small wins (small becomes big quickly, and momentum builds from minuscule steps), then scale what proves out.

Implementation Roadmap

Phase 1, months 1–3: Document the mundane

• Inventory five “always on” processes by throughput and pain.

• Standardize inputs, naming, and templates.

• Instrument time, queue length, rework rate, and escaped defects.

Phase 2, months 4–6: Verify and scale

• Add micro‑automations at handoffs.

• Insert guardrails that block bad work from moving.

• Publish a weekly “defects prevented” and “hours returned” report.

Phase 3, months 7–12: Advance to intelligence

• Introduce retrieval for internal knowledge.

• Pilot decision support where variance is high and stakes are moderate.

• Formalize exception paths and who owns them.

Phase 4, year 2+: Organizational transformation

• Rebase roles on the new cycle time.

• Shift managers toward coaching and system improvement.

• Update incentives to reward throughput, first‑time‑right, and learning.

Most large firms stall here because pilots never connect to process design and people fear the implications. Solve that with transparency, constant communication, iterative role design, and a focus on time saved and new opportunities created rather than headcount reduced.

The Contrarian Truth About AI ROI

ROI shows up first as cycle time and defect reduction (soft savings), not as line‑item savings (hard savings). Treat reclaimed time as capacity. Use it to increase output, improve quality, and shorten cash cycles. The “lump of labor” story is a trap. Productivity waves reallocate work and create new categories, even as they compress others. Build feedback loops and highly visible internal dashboards to communicate the changing capabilities.

Critical success factors

Operational skepticism

Assume your first draft is wrong. Inspect work early, then often.

Start with low‑variance, high‑volume tasks

Pick work you do every day. Avoid rare, high‑stakes edge cases until guardrails are solid.

Measure time, not headlines

Track hours returned, queues shortened, defects prevented, and time‑to‑decision. Tie gains to throughput and working capital.

Protect human advantage

As analysis automates, judgment, relationship work, and creative synthesis rise in value. Hire and train accordingly.

Metrics to publish monthly

• Hours returned to teams

• Cycle‑time reduction in top three flows

• Escaped defects per 1,000 units

• Percent of work passing first‑time‑right

• Share of work covered by templates and guardrails
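As a rough sketch of how the scoreboard numbers above could be computed from raw counts, the example below derives four of them for a single workflow. The `MonthlyFlow` record, its field names, and the sample figures are assumptions made for illustration, and minutes of effort per unit is used as a simple stand-in for cycle time.

```python
from dataclasses import dataclass


@dataclass
class MonthlyFlow:
    """Hypothetical monthly counts for one workflow (field names are assumptions)."""
    units_completed: int              # work items finished this month (must be > 0)
    units_passed_first_time: int      # finished with no rework
    escaped_defects: int              # defects found after work left the team
    baseline_minutes_per_unit: float  # measured before the change (must be > 0)
    current_minutes_per_unit: float   # measured this month


def hours_returned(m: MonthlyFlow) -> float:
    """Hours saved this month relative to the baseline time per unit."""
    saved = (m.baseline_minutes_per_unit - m.current_minutes_per_unit) * m.units_completed
    return saved / 60


def cycle_time_reduction_pct(m: MonthlyFlow) -> float:
    """Percent reduction in minutes per unit versus the baseline."""
    return 100 * (m.baseline_minutes_per_unit - m.current_minutes_per_unit) / m.baseline_minutes_per_unit


def escaped_defects_per_1000(m: MonthlyFlow) -> float:
    """Escaped defects normalized to 1,000 completed units."""
    return 1000 * m.escaped_defects / m.units_completed


def first_time_right_pct(m: MonthlyFlow) -> float:
    """Percent of completed units that needed no rework."""
    return 100 * m.units_passed_first_time / m.units_completed


if __name__ == "__main__":
    # Made-up sample numbers, for illustration only.
    intake = MonthlyFlow(2000, 1840, 6, 12.0, 9.0)
    print(f"Hours returned:           {hours_returned(intake):.1f}")
    print(f"Cycle-time reduction:     {cycle_time_reduction_pct(intake):.1f}%")
    print(f"Escaped defects per 1,000: {escaped_defects_per_1000(intake):.1f}")
    print(f"First-time-right:         {first_time_right_pct(intake):.1f}%")
```

Normalizing defects per 1,000 units and expressing first-time-right as a percentage keeps the scoreboard comparable across workflows with very different volumes.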

Common failure modes

• Pilots untethered from core workflows.

• Dirty or inconsistent inputs.

• No visible “hours returned” scoreboard.

• Attempting replacement before augmentation.

Operating Perspective

Five Takeaways For Operators:

✅ Document first — Standardize inputs, naming, and templates before you automate; variance controlled at the source compounds everywhere else.

✅ Measure throughput, not headlines — Track hours returned, cycle time, first‑time‑right, escaped defects, WIP and queue length; publish the scoreboard monthly.

✅ Start where volume is high and variance is low — Automate handoffs, add guardrails, then layer decision support; avoid rare, high‑stakes edge cases early.

✅ Run the learning loop — Use outcomes to update SOPs, templates, prompts, and routing rules; retire steps that no longer add value.

✅ Redeploy human advantage — Rebase roles on the new cycle time, train for judgment and synthesis, and align incentives to throughput and quality, not keystrokes.

Notes and further reading from Operating by John Brewton

• “The Problem of AI‑Transformation for Large Companies” — why gen‑AI pilots stall, and how to avoid it.

• “Bad Bad Bad (Data) Good Better Best (Data)” — why data integrity is the first constraint.

• “The Great Inversion” — why human skills become the premium layer.

• “Operating Economics: The Lump of Labor Fallacy” — displacement versus growth, with history.

• “What Gets Measured Gets Managed” — documentation as organizational memory.

If you’d like to work together, I’ve carved out some time to work 1:1 each month with a few top‑notch founders and operators. You can find the details here.

John Brewton documents the history and future of operating companies at Operating by John Brewton. He is a graduate of Harvard University and began his career as a Ph.D. student in economics at the University of Chicago. Since selling his family’s B2B industrial distribution company in 2021, he has been helping business owners, founders, and investors optimize their operations. He is the founder of 6A East Partners, a research and advisory firm asking the question: What is the future of companies? He still cringes at his early LinkedIn posts and loves making content each and every day, despite the occasional protestations of his beloved wife, Fabiola.

This piece nails it: focusing on mundane AI applications is the real move. Small, consistent productivity gains are what truly drive an organization forward, not chasing flashy, one-off projects.

This is the kind of thinking every operator needs in their bloodstream. John Brewton shows that the biggest ROI from AI isn’t flashy; it’s quiet, cumulative, and hidden in the mundane. Forget robot overlords. Think calendar triage, inbox triage, and rework prevention.

The leverage pyramid is brilliant: documentation at the base, decision support at the top. That’s how you build a future-proof org without hype.

If you had to pick just one metric to track AI’s compounding value across an organisation, which would you bet on?